Building reliable agents starts with choosing the right model. Today, we are expanding our LLM ecosystem and making it easier to navigate.

You now have access to a broader set of models across four new integrations:

Google Gemini models: Direct support for the latest Gemini models, including Gemini 3.0 Pro.

Helicone and OpenRouter: A wide catalog of frontier and specialized models available with consistent routing and key management.

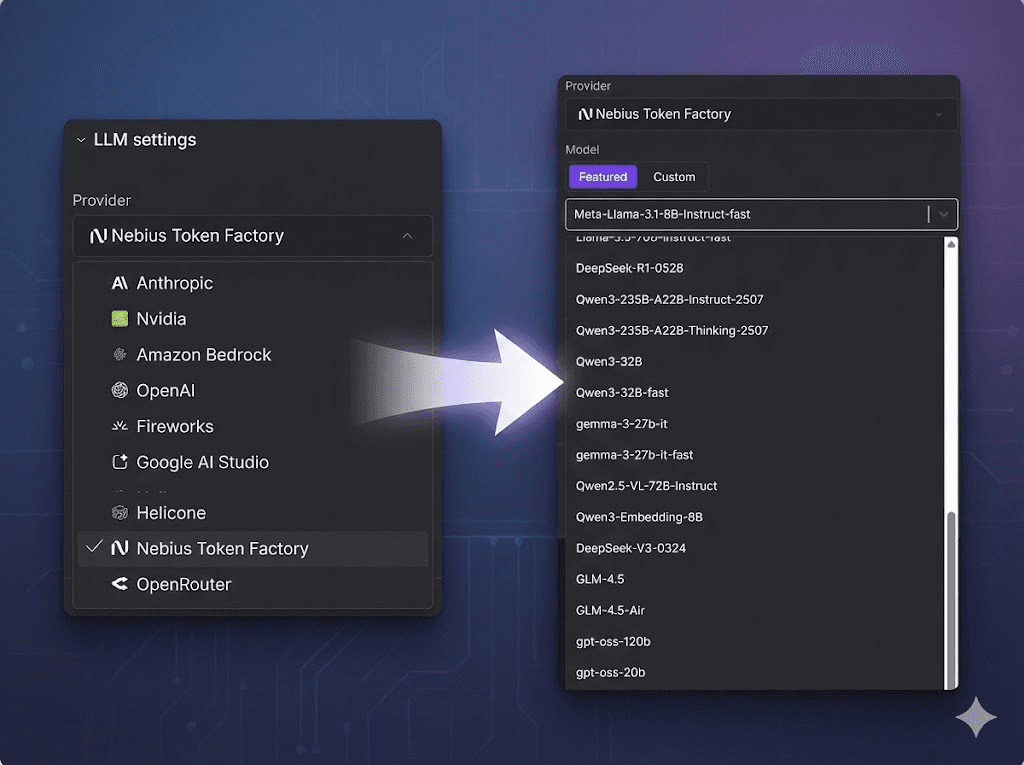

Nebius Token Factory: Aggregated access to open-weight ecosystems, including Meta Llama, NVIDIA Nemotron, Qwen, DeepSeek, and GPT OSS models.

Fireworks: High-performance inference for leading open-source model families with predictable latency and strong throughput.

These additions give you more flexibility to balance performance, cost, and compliance, without changing how you define or deploy agents.

When to consider moving from frontier models to open-source or small models

As model ecosystems mature, many workloads no longer require large frontier models. Tasks such as routing, classification, structured extraction, data cleanup, and domain-specific reasoning often run efficiently on smaller or open-source models with minimal quality loss. The advantages are practical: lower cost, lower latency, simpler deployment options, and more control over execution environments. With our unified interface, evaluating these alternatives is straightforward, since you can swap models without modifying agent logic.
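As a rough illustration of what "swap models without modifying agent logic" can look like, here is a minimal Python sketch. It assumes that the agent depends only on a small model interface and that a routing table maps each task type to a model ID. `ChatModel`, `EchoModel`, `ROUTES`, `Agent`, and the model IDs are hypothetical placeholders and are not part of our API. `EchoModel` stands in for a real provider client so the example runs offline.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Hypothetical stand-in for a provider client; tags output with its model ID."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Hypothetical routing table: task type -> model ID.
# Editing an entry here changes which model handles that task;
# the Agent class below never needs to change.
ROUTES: Dict[str, str] = {
    "classification": "small-open-model",
    "extraction": "small-open-model",
    "reasoning": "frontier-model",
}


def build_registry(model_ids: Iterable[str]) -> Dict[str, ChatModel]:
    """Create one (placeholder) client per model ID."""
    return {model_id: EchoModel(model_id) for model_id in model_ids}


class Agent:
    """Agent logic that only knows about task types, never about specific models."""

    def __init__(self, routes: Dict[str, str], registry: Dict[str, ChatModel]):
        self.routes = routes
        self.registry = registry

    def run(self, task: str, prompt: str) -> str:
        # Look up the model for this task type, then call it through the shared interface.
        model = self.registry[self.routes[task]]
        return model.complete(prompt)


if __name__ == "__main__":
    registry = build_registry(set(ROUTES.values()))
    agent = Agent(ROUTES, registry)
    print(agent.run("classification", "Is this a refund request?"))
    # Try a smaller model for reasoning too: only the routing table changes.
    cheaper = Agent({**ROUTES, "reasoning": "small-open-model"}, registry)
    print(cheaper.run("reasoning", "Summarize the ticket."))
```

In this sketch, moving a task to a smaller open-weight model is a one-entry change to the routing table, and `Agent.run` stays the same. That separation is what makes side-by-side evaluation cheap.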